5.2.1 Geometry¶

$$ \require{color} \definecolor{brightblue}{rgb}{.267, .298, .812} \definecolor{darkblue}{rgb}{0.0, 0.0, 1.0} \definecolor{palepink}{rgb}{1, .73, .8} \definecolor{softmagenta}{rgb}{.99,.34,.86} \definecolor{blueviolet}{rgb}{.537,.192,.937} \definecolor{jonquil}{rgb}{.949,.792,.098} \definecolor{shockingpink}{rgb}{1, 0, .741} \definecolor{royalblue}{rgb}{0, .341, .914} \definecolor{alien}{rgb}{.529,.914,.067} \definecolor{crimson}{rgb}{1, .094, .271} \definecolor{indigo}{rgb}{.8, 0, .6} \def\ihat{\hat{\mmlToken{mi}[mathvariant="bold"]{ı}}} \def\jhat{\hat{\mmlToken{mi}[mathvariant="bold"]{ȷ}}} \def\khat{\hat{\mathsf{k}}} \def\tombstone{\unicode{x220E}} \def\contradiction{\unicode{x2A33}} \def\textregistered{^{\unicode{xAE}}} \def\trademark{^{\unicode{x54}\unicode{x4D}}} $$

Inner Products and Orthogonality



We have discussed the geometry of the vector space $\mathbb{R}^n$ for any positive integer $n$ using the Euclidean scalar product, or dot product. While a great many mathematical models using linear algebra rely on these Euclidean spaces, we can extend our use of scalar products to include non-Euclidean geometries on the vector space $\mathbb{R}^n$. We can also extend our use of scalar products to describe geometries on more abstract vector spaces.

A scalar product defined on a vector space is called an inner product. The Euclidean inner product on the vector space $\mathbb{R}^n$ is called the dot product. An inner product endows a vector space with the notions of magnitude (or norm) and direction. This means that adding an inner product to a vector space gives it a geometry. Together, the vector space and its inner product are called an inner product space.

5.2.2 Weighted Inner Product¶

Example 1¶

One can define a non-Euclidean inner product on the vector space $\mathbb{R}^n$. Given a vector $\mathbf{w}\in\mathbb{R}^n$ with positive entries one defines a weighted inner product on $\mathbb{R}^n$ by

$$ \langle \mathbf{x},\mathbf{y} \rangle\,= \displaystyle\sum_{i=1}^n x_iy_iw_i = x_1y_1w_1 + x_2y_2w_2 + \cdots + x_ny_nw_n $$

This satisfies the same three properties as the Euclidean inner product on $\mathbb{R}^n$. For all vectors $\mathbf{x}$, $\mathbf{y}$, $\mathbf{z}\in\mathbb{R}^n$ and scalars $\alpha$, $\beta\in\mathbb{R}$,

- The inner product is positive definite.

$$ \langle \mathbf{x},\mathbf{x}\rangle\,= \displaystyle\sum_{i=1}^n x_ix_iw_i = \displaystyle\sum_{i=1}^n x_i^2w_i \ge 0 $$

since $x_i^2\ge 0$ and $w_i\gt 0$ for all $1\le i\le n$. Furthermore, if $\langle \mathbf{x},\mathbf{x}\rangle\,=\,0$, then every term of this sum of nonnegative numbers must be zero, so

$$ \begin{align*} \displaystyle\sum_{i=1}^n x_i^2w_i &= 0 \\ x_i^2w_i &= 0 \qquad\qquad 1\le i\le n \\ x_i^2 &= 0 \qquad\qquad 1\le i\le n \\ x_i &= 0 \qquad\qquad 1\le i\le n \end{align*} $$

Thus $\mathbf{x}$ is the zero vector $\mathbf{0}$.

- The inner product is commutative

$$ \langle \mathbf{x},\mathbf{y}\rangle\,= \displaystyle\sum_{i=1}^n x_iy_iw_i = \displaystyle\sum_{i=1}^n y_ix_iw_i =\,\langle \mathbf{y},\mathbf{x}\rangle $$

- The inner product is multi-linear

$$ \begin{align*} \langle \alpha\mathbf{x} + \beta\mathbf{y}, \mathbf{z}\rangle &= \displaystyle\sum_{i=1}^n (\alpha x_i + \beta y_i)z_iw_i \\ &= \alpha\displaystyle\sum_{i=1}^n x_iz_iw_i + \beta\displaystyle\sum_{i=1}^n y_iz_iw_i \\ &=\alpha\langle\mathbf{x}, \mathbf{z}\rangle\,+\,\beta\langle\mathbf{y}, \mathbf{z}\rangle \end{align*} $$

Notice that using properties 2 and 3 together gives us

$$ \langle \mathbf{x}, \alpha\mathbf{y} + \beta\mathbf{z}\rangle = \langle \alpha\mathbf{y} + \beta\mathbf{z}, \mathbf{x}\rangle = \alpha\langle\mathbf{y}, \mathbf{x}\rangle + \beta\langle\mathbf{z}, \mathbf{x}\rangle = \alpha\langle\mathbf{x}, \mathbf{y}\rangle + \beta\langle\mathbf{x}, \mathbf{z}\rangle $$
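The three properties above can also be checked numerically. The following is a minimal sketch in plain Python; the function name `weighted_inner` and the sample vectors, weights, and scalars are illustrative assumptions, not values from the text.

```python
def weighted_inner(x, y, w):
    """Weighted inner product <x, y> = sum of x_i * y_i * w_i (hypothetical helper)."""
    if not (len(x) == len(y) == len(w)):
        raise ValueError("x, y and w must have the same length")
    if any(w_i <= 0 for w_i in w):
        raise ValueError("every weight must be positive")
    return sum(x_i * y_i * w_i for x_i, y_i, w_i in zip(x, y, w))


# Illustrative data (assumed for this sketch).
w = [2.0, 1.0, 0.5]
x = [1.0, -2.0, 4.0]
y = [3.0, 0.0, -1.0]
z = [0.5, 1.0, 2.0]
alpha, beta = 2.0, -3.0

# Positive definiteness: <x, x> is a sum of nonnegative terms.
xx = weighted_inner(x, x, w)

# Symmetry: <x, y> - <y, x> should be zero.
sym_gap = weighted_inner(x, y, w) - weighted_inner(y, x, w)

# Linearity in the first argument:
# <alpha x + beta y, z> - (alpha <x, z> + beta <y, z>) should be zero.
combo = [alpha * x_i + beta * y_i for x_i, y_i in zip(x, y)]
lin_gap = weighted_inner(combo, z, w) - (
    alpha * weighted_inner(x, z, w) + beta * weighted_inner(y, z, w)
)

print(xx, sym_gap, lin_gap)
```

A numerical check on one set of vectors is not a proof, but it is a quick way to catch a mistake in a proposed inner product before attempting the algebra.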

5.2.3 Low Pass Filter¶

Example 2¶

In the A440 pitch standard, middle C is 261.626 Hz. The octaves of the $C$ pitch are given by

| Scientific designation | Frequency (Hz) | Name |
| --- | --- | --- |
| $C_{-1}$ | 8.176 | |
| $C_0$ | 16.352 | |
| $C_1$ | 32.703 | |
| $C_2$ | 65.406 | |
| $C_3$ | 130.813 | |
| $C_4$ | 261.626 | Middle C |
| $C_5$ | 523.251 | |
| $C_6$ | 1046.502 | |
| $C_7$ | 2093.005 | |
| $C_8$ | 4186.009 | |
| $C_9$ | 8372.018 | |
| $C_{10}$ | 16744.036 | |

A frequency vector $\mathbf{v}\in\mathbb{R}^{12}$ is a list of 12 magnitudes or intensities, one for each of the frequencies listed in the table above. A low pass filter scales the middle and high frequency elements of the vector by a small number. If one chooses

$$ \mathbf{w} = [ 1,\ 1,\ 1,\ 1,\ .1,\ .1,\ .1,\ .1,\ .01,\ .01,\ .01,\ .01 ]^T $$

the resulting weighted inner product will produce a geometry that leaves the low frequency elements of the vector unchanged but diminishes the middle and high frequency elements. The low frequency elements will have significantly more influence on the magnitude of a frequency vector.

The magnitude of the frequency vector

$$ \mathbf{v} = [ 1,\ 0,\ 0,\ 0,\ 0,\ 0,\ 0,\ 0,\ 1,\ 1,\ 1,\ 1 ]^T $$

will be

$$ \left\|\mathbf{v}\right\| = \sqrt{ 1^2 + 1^2(.01) + 1^2(.01) + 1^2(.01) + 1^2(.01)} = \sqrt{1.04} \approx 1.0198 $$
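The magnitude computed above can be reproduced in a few lines of Python. This is a sketch under stated assumptions: the helper name `weighted_norm` is hypothetical, and the frequency vector is taken to have 12 entries, with a 1 in the first position and in the last four positions, so that it matches the five terms under the square root.

```python
import math


def weighted_norm(v, w):
    """Norm induced by the weighted inner product: sqrt(sum of v_i^2 * w_i)."""
    if len(v) != len(w):
        raise ValueError("v and w must have the same length")
    return math.sqrt(sum(v_i * v_i * w_i for v_i, w_i in zip(v, w)))


# Low pass filter weights from the text.
w = [1, 1, 1, 1, 0.1, 0.1, 0.1, 0.1, 0.01, 0.01, 0.01, 0.01]

# Assumed 12-entry frequency vector: one low note and four high notes.
v = [1, 0, 0, 0, 0, 0, 0, 0, 1, 1, 1, 1]

filtered = weighted_norm(v, w)           # weighted (low pass) magnitude
unfiltered = weighted_norm(v, [1] * 12)  # ordinary Euclidean magnitude

print(filtered, unfiltered)
```

Comparing the two values shows the effect of the weights: in the Euclidean norm the four high frequency entries dominate, while in the weighted norm the single low frequency entry contributes almost all of the magnitude.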

5.2.4 Frobenius Inner Product¶

Example 3¶

Recall that using matrix addition and scalar multiplication of matrices we have the vector space of all $m\times n$ matrices

$$ \mathbb{R}^{m\times n} := \left\{ A = [a_{ij}]\,:\, a_{ij}\in\mathbb{R},\ 1\le i\le m,\ 1\le j\le n\right\} $$

One may define an inner product on the vector space $\mathbb{R}^{m\times n}$ similar to the Euclidean inner product on $\mathbb{R}^{mn}$. For any vectors $A$, $B\in\mathbb{R}^{m\times n}$,

$$ \langle A,B\rangle_F\,:=\,\displaystyle\sum_{i=1}^m\,\displaystyle\sum_{j=1}^n a_{ij}b_{ij} $$

This is the Frobenius inner product on the vector space $\mathbb{R}^{m\times n}$.

5.2.5 Statistics and Sampling¶

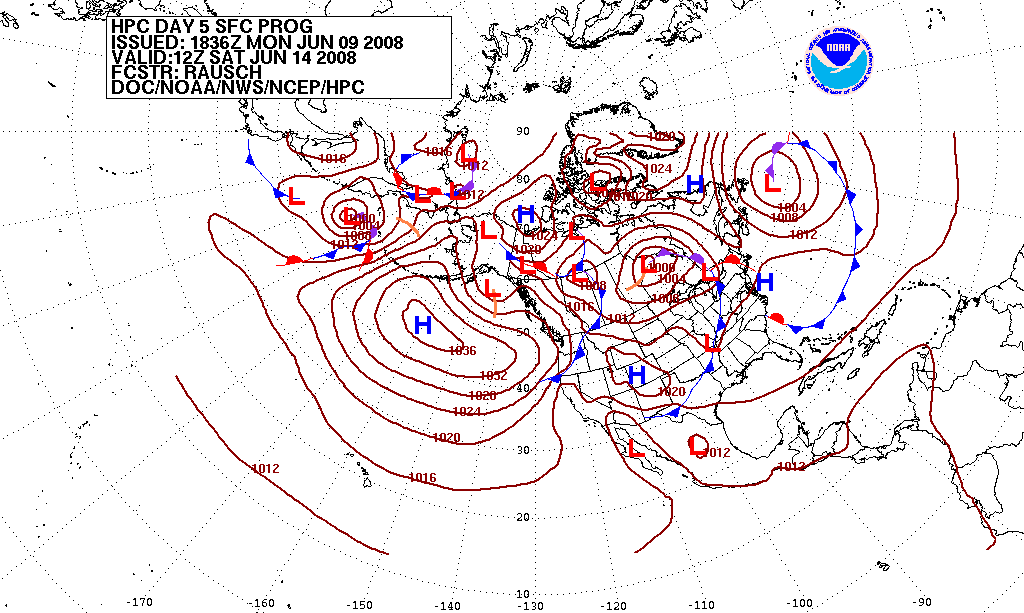

This is a file from the Wikimedia Commons. Information about this image can be obtained from its Description Page.

The mathematical models of complex systems such as weather, financial markets, disease transmission, and quality assurance are generally determined by measuring the state of the system at various systematically appointed locations in the population. Weather measurements are taken at many geographical locations. Financial data is collected for key products, businesses or markets. The key property these types of applications share is that they are very large and/or embody complicated interactions of the elements of the population. One must obtain a sample of the state of a complex system at a finite number of locations, and use the values of this sample to estimate parameters such as inner product within a mathematical model.

Example 4¶

On the vector space $P_n$ of polynomials of degree less than $n$, let $\{x_1,\ x_2,\ \dots,\ x_n\}$ be $n$ distinct (different) real numbers. For any two polynomials $p$, $q\in P_n$, one may define an inner product by

$$ \langle p,q\rangle\,:=\,\displaystyle\sum_{i=1}^n p(x_i)q(x_i) $$

Example 5¶

We may again choose a positive function $w(t)$ defined on $[a,b]$ and define the weighted inner product on $P_n$ for any two polynomials $p$, $q\in P_n$ by

$$ \langle p,q\rangle\,:=\,\displaystyle\sum_{i=1}^n p(x_i)q(x_i)w(x_i) $$

We can choose these points $\{x_1,\ x_2,\ \dots,\ x_n\}$ to be evenly distributed in an interval $[a,b]$. These values can be considered a sample of the values in the interval. How these values are chosen constitutes an academic field of its own.

Example 6¶

We may use a constant weighting factor for a sample of $n$ points in an interval $[a,b]$, and define the inner product on the vector space of all real polynomials $P$ by

$$ \langle p,q\rangle := \dfrac{b-a}{n}\displaystyle\sum_{i=1}^n p(x_i)q(x_i) $$

for any polynomials $p$, $q\in P$.

5.2.6 Integral Inner Products¶

If one increases the number of sampling points without bound, then for evenly spaced points in the interval $[a,b]$, $a=x_0\lt x_1\lt x_2\lt \dots \lt x_n=b$, one obtains $\Delta x = \frac{b-a}{n}$. Thus

$$ \langle p,q\rangle = \displaystyle\lim_{n\rightarrow\infty} \dfrac{b-a}{n}\displaystyle\sum_{i=1}^n p(x_i)q(x_i) = \displaystyle\lim_{n\rightarrow\infty} \displaystyle\sum_{i=1}^n p(x_i)q(x_i)\dfrac{b-a}{n} = \displaystyle\lim_{n\rightarrow\infty} \displaystyle\sum_{i=1}^n p(x_i)q(x_i)\Delta x $$

The last expression is a Riemann Sum so

$$ \langle p,q\rangle = \displaystyle\lim_{n\rightarrow\infty} \displaystyle\sum_{i=1}^n p(x_i)q(x_i)\Delta x = \displaystyle\int_a^b p(x)q(x)\,dx $$
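The convergence of the sampled inner product to the integral can be observed numerically. The sketch below is illustrative only: the helper `sampled_inner`, the choice of right endpoints $x_i = a + i\,\Delta x$, and the polynomials $p(x)=x$ and $q(x)=x+1$ on $[-1,1]$ are all assumptions made for this example. For these polynomials the integral $\int_{-1}^1 x(x+1)\,dx$ equals $\frac{2}{3}$.

```python
def sampled_inner(p, q, a, b, n):
    """(b - a)/n times the sum of p(x_i) * q(x_i) at the right endpoints x_i = a + i*dx."""
    dx = (b - a) / n
    return dx * sum(p(a + i * dx) * q(a + i * dx) for i in range(1, n + 1))


def p(x):
    return x


def q(x):
    return x + 1


# The sampled inner product approaches the integral as n grows.
for n in (10, 100, 1000, 10000):
    print(n, sampled_inner(p, q, -1.0, 1.0, n))
```

The printed values should settle toward $\frac{2}{3}$ as $n$ increases, which is the Riemann sum argument above carried out for one particular pair of polynomials.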

Example 7¶

On the vector space of continuous functions $C[a,b]$ one may define an inner product for any functions $f$, $g\in C[a,b]$ by

$$ \langle f,g\rangle\,:=\,\displaystyle\int_a^b f(t)g(t)\,dt $$

Example 8¶

In the vector space $C[a,b]$, we may choose a positive continuous function $w\in C[a,b]$. This function is called a weight function, and one may define a weighted inner product for any continuous functions $f$, $g\in C[a,b]$ by

$$ \langle f,g\rangle\,:=\,\displaystyle\int_a^b f(t)g(t)w(t)\,dt $$

Example 9¶

One may also choose a function $w$ defined on the interval $[a,b]$ so that its derivative $w'$ is positive and continuous on $[a,b]$. In this case we have $w\in C^1[a,b]$ and one may define a weighted inner product for any continuous functions $f$, $g\in C[a,b]$ by

$$ \langle f,g\rangle\,:=\,\displaystyle\int_a^b f(t)g(t)w'(t)dt = \displaystyle\int_a^b f(t)g(t) dw $$

This is called the Riemann-Stieltjes integral. The differential $dw = w'(t)\,dt$ is the same one used to define substitution in integral calculus.

Example 10¶

If we choose a positive function $w\in C^1[a,b]$ so that $\displaystyle\lim_{t\rightarrow -\infty} w(t) = 0$ and $\displaystyle\lim_{t\rightarrow\infty} w(t) = 1$, then we call this function a cumulative probability distribution function or distribution function. Using this distribution function we may define the probability density function $p(t) = w'(t)$. Now we may define the inner product on $C[a,b]$ for any two functions $f$, $g\in C[a,b]$ by

$$ \langle f,g\rangle\,:=\,\displaystyle\int f(t)g(t)p(t)\,dt = \displaystyle\int f(t)g(t)w'(t)\,dt = \displaystyle\int f(t)g(t)\,dw $$

5.2.7 Properties of Inner Products¶

Definition Inner Product¶

An inner product on a vector space $V$ is a function $\langle\cdot,\cdot\rangle:V\times V\rightarrow\mathbb{R}$ such that for any vectors $\mathbf{x}$, $\mathbf{y}$, $\mathbf{z}\in V$ and scalars $\alpha$, $\beta\in\mathbb{R}$,

1. $\langle \mathbf{x}, \mathbf{x}\rangle\,\ge\,0$ with equality if and only if $\mathbf{x}=\mathbf{0}$

2. $\langle \mathbf{x}, \mathbf{y}\rangle\,=\,\langle \mathbf{y}, \mathbf{x}\rangle$

3. $\langle \alpha\mathbf{x} + \beta\mathbf{y}, \mathbf{z} \rangle\,=\,\alpha\langle\mathbf{x}, \mathbf{z}\rangle + \beta\langle\mathbf{y}, \mathbf{z}\rangle$

This means that the inner product has two inputs, two vectors from our vector space, and one scalar output. The function must satisfy the three properties for any vectors $\mathbf{x}$, $\mathbf{y}$ and $\mathbf{z}$ in our vector space and any two real numbers $\alpha$ and $\beta$. These are precisely the important properties of the dot product on the vector space $\mathbb{R}^n$ that make Euclidean space so useful.

Exercise 1¶

Show that the Frobenius inner product satisfies the definition of an inner product on the vector space $\mathbb{R}^{m\times n}$.

Follow Along

For any matrices $A$, $B$ and $C\in\mathbb{R}^{m\times n}$ and scalars $\alpha$, $\beta\in\mathbb{R}$,

- The Frobenius inner product is positive definite.

$$ \begin{align*} \langle A, A\rangle\,&=\,\displaystyle\sum_{i=1}^m\displaystyle\sum_{j=1}^n a_{ij}a_{ij} = \displaystyle\sum_{i=1}^m\displaystyle\sum_{j=1}^n a_{ij}^2 \ge 0. \end{align*} $$

Moreover $\langle A, A\rangle = 0$ if and only if $a_{ij}^2 = 0$ for all $1\le i\le m$ and $1\le j\le n$, since it is a sum of nonnegative numbers. Thus $\langle A, A\rangle = 0$ if and only if $a_{ij}=0$ for all $1\le i\le m$ and $1\le j\le n$. That is, if and only if $A$ is the $m\times n$ zero matrix $\mathbf{0}$.

- The Frobenius inner product is symmetric.

$$ \begin{align*} \langle A, B\rangle &= \displaystyle\sum_{i=1}^m\displaystyle\sum_{j=1}^n a_{ij}b_{ij} = \displaystyle\sum_{i=1}^m\displaystyle\sum_{j=1}^n b_{ij}a_{ij} = \langle B, A\rangle \end{align*} $$

- The Frobenius inner product is multi-linear.

$$ \begin{align*} \langle \alpha A + \beta B, C\rangle\, &= \langle \alpha[a_{ij}] + \beta[b_{ij}], [c_{ij}]\rangle \\ &= \langle \left[\alpha a_{ij} + \beta b_{ij}\right], [c_{ij}]\rangle \\ &= \displaystyle\sum_{i=1}^m\displaystyle\sum_{j=1}^n (\alpha a_{ij} + \beta b_{ij})c_{ij} \\ &= \alpha\displaystyle\sum_{i=1}^m\displaystyle\sum_{j=1}^n a_{ij}c_{ij} + \beta\displaystyle\sum_{i=1}^m\displaystyle\sum_{j=1}^n b_{ij}c_{ij} \\ &= \alpha\langle A, C\rangle + \beta\langle B, C\rangle \end{align*} $$

Example 7 (continued)¶

To see that the inner product of Example 7 is positive definite, we show that $\langle f, f\rangle\gt 0$ whenever $f$ is not the zero function. Suppose $f\in C[a,b]$ is a continuous function and $f(x_0)\neq 0$ for some value $a\le x_0\le b$. Then, as $f$ is continuous at every point in the interval $[a,b]$, $f$ has no jump discontinuities or vertical asymptotes in this interval. This means that the graph of $f$ must rise above zero continuously to $f(x_0)\gt 0$ or descend below zero continuously to $f(x_0)\lt 0$. As the product of continuous functions is continuous, in either case the graph of the function $f^2$ rises continuously above zero to $f(x_0)^2\gt 0$. By the continuity of $f^2$ at $x_0$, there must be a subinterval $I=[c,d]$ of the interval $[a,b]$, with $a\le c\lt d\le b$, on which $f^2(x)\gt \frac{f(x_0)^2}{2}$ for every point in $I$. Thus, since $f^2\ge 0$ on all of $[a,b]$,

$$ \langle f, f\rangle = \displaystyle\int_a^b f^2(t)\,dt \ge \displaystyle\int_c^d f^2(t)\,dt \ge \displaystyle\int_c^d \frac{f(x_0)^2}{2}\,dt = \dfrac{f(x_0)^2}{2}(d-c) \gt 0 $$

Exercise 2¶

Show that the inner product in Example 7 satisfies conditions two and three of the definition of an inner product.

Follow Along

For any continuous functions $f$, $g$, $h\in C[a,b]$, and any scalars $\alpha$, $\beta\in\mathbb{R}$,

- We have already shown that this inner product is positive definite.

- This inner product is symmetric.

$$ \langle f, g\rangle = \displaystyle\int_a^b f(t)g(t)\,dt = \displaystyle\int_a^b g(t)f(t)\,dt = \langle g, f\rangle $$

- This inner product is multi-linear

$$ \begin{align*} \langle\alpha f + \beta g, h\rangle &= \displaystyle\int_a^b \left(\alpha f(t) + \beta g(t)\right)h(t)\,dt \\ &= \displaystyle\int_a^b \left(\alpha f(t)h(t) + \beta g(t)h(t)\right)\,dt \\ &= \alpha\displaystyle\int_a^b f(t)h(t)\,dt + \beta\displaystyle\int_a^b g(t)h(t)\,dt \\ &= \alpha\langle f, h\rangle + \beta\langle g, h\rangle \end{align*} $$

5.2.8 Magnitude of a Vector in an Inner Product Space¶

Definition Norm of a Vector in an Inner Product Space¶

If $\ \mathbf{u}$ is a vector in an inner product space $V$, then the norm or magnitude of the vector in this inner product space is defined by

$$ \|\mathbf{u}\| := \langle\mathbf{u}, \mathbf{u}\rangle^{1/2} $$

This means that we can define the magnitude in any abstract vector space if we can define an inner product on the vector space.

Example 2 (continued)¶

As a low pass filter is also defined using a positive vector in $\mathbb{R}^n$, we have that this also defines a weighted inner product on $\mathbb{R}^n$.

The first property of an inner product is important because it allows us to define a notion of magnitude in our inner product space. In Example 2, the norm or magnitude of a frequency vector is defined for any frequency vector $\mathbf{f}\in\mathbb{R}^{12}$ by

$$ \|\mathbf{f}\| := \sqrt{\langle\mathbf{f}, \mathbf{f}\rangle} = \left(\displaystyle\sum_{i=1}^{12} f_i^2w_i\right)^{1/2} $$

Example 3 (continued)¶

Consider the matrices

$$ A = \begin{bmatrix} 4\ &\ \ 2\ & -3\ \\ 2\ & -1\ &\ \ 2\ \end{bmatrix}\ \text{and}\ B = \begin{bmatrix} -3\ &\ 0\ &\ 2\ \\ \ \ 0\ &\ 1\ &\ 2\ \end{bmatrix} $$

in vector space $\mathbb{R}^{2\times 3}$ with the Frobenius inner product from Example 3. The inner product of matrices $A$ and $B$ is given by

$$ \begin{align*} \langle A, B\rangle_F &= \left[ (4)(-3) + (2)(0) + (-3)(2)\right] + \left[(2)(0) + (-1)(1) + (2)(2) \right] \\ &= -12 + 0 - 6 + 0 - 1 + 4 = -15 \end{align*} $$

The Frobenius norms of these matrices are

$$ \begin{align*} \|A\|_F &= \left( 4^2 + 2^2 + (-3)^2 + 2^2 + (-1)^2 + 2^2 \right)^{1/2} = \sqrt{38} \\ \\ \|B\|_F &= \left( (-3)^2 + 0^2 + 2^2 + 0^2 + 1^2 + 2^2 \right)^{1/2} = \sqrt{18} = 3\sqrt{2} \end{align*} $$
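The arithmetic in this example can be checked with a short Python sketch. The helper names `frobenius_inner` and `frobenius_norm` are hypothetical, and matrices are represented as lists of rows, which is an assumption of this sketch rather than anything prescribed by the text.

```python
import math


def frobenius_inner(A, B):
    """Frobenius inner product: sum of a_ij * b_ij over all entries."""
    if len(A) != len(B) or any(len(ra) != len(rb) for ra, rb in zip(A, B)):
        raise ValueError("matrices must have the same shape")
    return sum(a * b for ra, rb in zip(A, B) for a, b in zip(ra, rb))


def frobenius_norm(A):
    """Norm induced by the Frobenius inner product."""
    return math.sqrt(frobenius_inner(A, A))


# The matrices A and B from the example, stored row by row.
A = [[4, 2, -3], [2, -1, 2]]
B = [[-3, 0, 2], [0, 1, 2]]

print(frobenius_inner(A, B))   # the inner product <A, B>_F
print(frobenius_inner(A, A))   # ||A||_F squared
print(frobenius_inner(B, B))   # ||B||_F squared
print(frobenius_norm(A), frobenius_norm(B))
```

Because the Frobenius inner product treats an $m\times n$ matrix as a list of $mn$ numbers, the same code would work unchanged for any pair of matrices with matching shapes.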

5.2.9 Angle Between Two Vectors¶

We define the angle between two vectors in an inner product space by using the definition of angle derived from Euclidean space.

Definition The Angle Between Two Vectors¶

If $\mathbf{u}$ and $\mathbf{v}$ are nonzero vectors in an inner product space $V$, then the cosine of the angle $\theta$ between the vectors is defined by

$$ \cos(\theta) := \dfrac{\langle\mathbf{u}, \mathbf{v}\rangle}{\|\mathbf{u}\|\,\|\mathbf{v}\|} $$

To determine the angle between two vectors $\mathbf{u}$ and $\mathbf{v}$ in an inner product space $V$, one uses the principal arc cosine of this quotient to obtain an angle $\theta$ in the interval $[0,\pi]$. This does not mean that there is a physical interpretation of the angle between two vectors in an abstract inner product space. One of the most useful concepts of angle in an abstract inner product space is orthogonality. If this angle is $\frac{\pi}{2}$, then $\cos\left(\frac{\pi}{2}\right)=0$, so the inner product must be zero:

$$ \dfrac{\langle\mathbf{u}, \mathbf{v}\rangle}{\|\mathbf{u}\|\,\|\mathbf{v}\|} = \cos\left(\frac{\pi}{2}\right) = 0 $$

Definition 5.2.4 Orthogonal¶

If $\ \mathbf{u}$ and $\mathbf{v}$ are vectors in an inner product space $V$ such that their inner product $\langle\mathbf{u}, \mathbf{v}\rangle = 0$, then the vectors are orthogonal.

Since the inner product of the zero vector with any nonzero vector in an inner product space will be the scalar zero, the zero vector is orthogonal to all nonzero vectors in an inner product space.

Moreover, many key properties of length and angle turn out to hold in abstract inner product spaces as well. If two vectors $\mathbf{u}$ and $\mathbf{v}$ are orthogonal in an inner product space, then they form the two legs of a right triangle in the inner product space with hypotenuse

$$ \mathbf{p} = \mathbf{u} + \mathbf{v} $$

We use the terms right triangle, leg and hypotenuse loosely here because there is no way to draw an adequate picture of such a triangle in an abstract inner product space. However the picture in $\mathbb{R}^2$ suggests that the intuition about the relationships of the vectors is the same.

Theorem 5.2.1¶

The Pythagorean Rule

If $\ \mathbf{u}$ and $\mathbf{v}$ are orthogonal vectors in an inner product space $V$, then

$$ \left\|\mathbf{u} + \mathbf{v}\right\|^2 = \left\|\mathbf{u}\right\|^2 + \left\|\mathbf{v}\right\|^2 $$

Proof:¶

$$ \begin{align*} \left\|\mathbf{u} + \mathbf{v}\right\|^2 &= \langle\mathbf{u}+\mathbf{v}, \mathbf{u}+\mathbf{v}\rangle \\ &= \langle\mathbf{u}, \mathbf{u}\rangle + 2\langle\mathbf{u}, \mathbf{v}\rangle + \langle\mathbf{v}, \mathbf{v}\rangle \\ &= \|\mathbf{u}\|^2 + 2\cdot 0 + \|\mathbf{v}\|^2 \\ &= \|\mathbf{u}\|^2 + \|\mathbf{v}\|^2 \end{align*} $$

∎

Example 11¶

Consider the vector space $C[-1,1]$ with the inner product defined in Example 7. The vectors $f(x)=1$ and $g(x)=x$ are orthogonal because

$$ \langle 1, x\rangle = \displaystyle\int_{-1}^1 1\cdot x\,dx = \left[ \frac{x^2}{2} \right]_{-1}^1 = \frac{1}{2} - \frac{1}{2} = 0 $$

Computing the squared norms of these vectors and of their sum, one obtains

$$ \begin{align*} \langle 1, 1\rangle &= \displaystyle\int_{-1}^1 1\cdot 1\,dx = \left[ x \right]_{-1}^1 = 1 - (-1) = 2 \\ \\ \langle x, x\rangle &= \displaystyle\int_{-1}^1 x\cdot x\,dx = \left[ \frac{x^3}{3} \right]_{-1}^1 = \frac{1}{3} - \left(-\frac{1}{3}\right) = \frac{2}{3} \\ \\ \langle x+1, x+1\rangle &= \displaystyle\int_{-1}^1 (x+1)(x+1)\,dx = \left[ \frac{(x+1)^3}{3} \right]_{-1}^1 = \left[ \frac{2^3}{3} - \frac{0}{3} \right] = \frac{8}{3} \\ \\ \| 1\|^2 + \| x\|^2 &= 2 + \frac{2}{3} = \frac{8}{3} = \|x+1\|^2\qquad\LARGE{\color{green}\checkmark} \end{align*} $$

Example 12¶

In the vector space $C[-\pi,\pi]$, use the inner product defined in Example 8 with the constant weight function $w(t) = \frac{1}{\pi}$:

$$ \langle\mathbf{f}, \mathbf{g}\rangle = \dfrac{1}{\pi}\displaystyle\int_{-\pi}^{\pi} f(t)g(t)\,dt $$

Consider the vectors $f(t) = \cos(t)$ and $g(t) = \sin(t)$.

$$ \begin{align*} \langle\cos(t), \sin(t)\rangle &= \dfrac{1}{\pi}\displaystyle\int_{-\pi}^{\pi} \cos(t)\sin(t)\,dt = \dfrac{1}{2\pi}\displaystyle\int_{-\pi}^{\pi} \sin(2t)\,dt = \dfrac{1}{2\pi}\left[ -\dfrac{1}{2}\cos(2t) \right]_{-\pi}^{\pi} \\ \\ &= -\dfrac{1}{4\pi}\left[ 1 - 1 \right] = 0 \\ \\ \langle\cos(t), \cos(t)\rangle &= \dfrac{1}{\pi}\displaystyle\int_{-\pi}^{\pi} \cos(t)\cos(t)\,dt = \dfrac{1}{\pi}\displaystyle\int_{-\pi}^{\pi} \cos(t)^2\,dt \\ \\ &= \dfrac{1}{2\pi}\displaystyle\int_{-\pi}^{\pi} \left(1 + \cos(2t)\right)\,dt = \dfrac{1}{2\pi}\left[ t + \dfrac{1}{2}\sin(2t)\right]_{-\pi}^{\pi} \\ \\ &= \dfrac{1}{2\pi}\left[ \pi + \frac{0}{2} - \left( -\pi + \frac{0}{2} \right) \right] = 1 \\ \\ \langle\sin(t), \sin(t)\rangle &= \dfrac{1}{\pi}\displaystyle\int_{-\pi}^{\pi} \sin(t)\sin(t)\,dt = \dfrac{1}{\pi}\displaystyle\int_{-\pi}^{\pi} \sin(t)^2\,dt \\ \\ &= \dfrac{1}{2\pi}\displaystyle\int_{-\pi}^{\pi} \left(1 - \cos(2t)\right)\,dt = \dfrac{1}{2\pi}\left[ t - \dfrac{1}{2}\sin(2t)\right]_{-\pi}^{\pi} \\ \\ &= \dfrac{1}{2\pi}\left[ \pi - \frac{0}{2} - \left( -\pi - \frac{0}{2} \right) \right] = 1 \end{align*} $$

Thus $f(t)=\cos(t)$ and $g(t)=\sin(t)$ are orthogonal unit vectors in this inner product space. They are orthogonal because their inner product is zero; they are unit vectors because their norms are one.
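These three integrals can be approximated numerically as a sanity check. The following is a rough sketch, not a definitive implementation: it assumes a midpoint rule with `n` equal subintervals, and the function name `inner` and the default `n=2000` are choices made for this illustration.

```python
import math


def inner(f, g, n=2000):
    """Midpoint-rule approximation of (1/pi) * integral of f(t) g(t) over [-pi, pi]."""
    dt = 2 * math.pi / n
    total = 0.0
    for i in range(n):
        t = -math.pi + (i + 0.5) * dt  # midpoint of the i-th subinterval
        total += f(t) * g(t)
    return total * dt / math.pi


print(inner(math.cos, math.sin))  # should be close to 0 (orthogonal)
print(inner(math.cos, math.cos))  # should be close to 1 (unit vector)
print(inner(math.sin, math.sin))  # should be close to 1 (unit vector)
```

The same function can be used to test other pairs, for example `inner(lambda t: math.cos(2 * t), math.cos)`, to explore which trigonometric functions are orthogonal in this inner product space.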

5.2.10 Orthogonal Projection¶

Like the definition for the angle between two vectors in an abstract inner product space, the projection is a formal definition that may or may not have a physical interpretation. However like orthogonality, projection has many useful applications in linear algebra.

Definition Orthogonal Component¶

If $\ \mathbf{u}$ and $\mathbf{v} \neq \mathbf{0}$ are two vectors in an inner product space $V$, then the component or scalar projection of vector $\mathbf{u}$ onto $\mathbf{v}$ is defined by

$$ \alpha = \text{Comp}_{\mathbf{v}}\mathbf{u} := \dfrac{\langle\mathbf{u}, \mathbf{v}\rangle}{\|\mathbf{v}\|} $$

Definition 5.2.5 Orthogonal Projection¶

The projection or vector projection of vector $\mathbf{u}$ onto $\mathbf{v}$ is defined by

$$ \mathbf{p} = \text{Proj}_{\mathbf{v}}\mathbf{u} := \alpha\left(\dfrac{\mathbf{v}}{\|\mathbf{v}\|}\right) = \dfrac{\langle\mathbf{u}, \mathbf{v}\rangle}{\|\mathbf{v}\|}\left(\dfrac{\mathbf{v}}{\|\mathbf{v}\|}\right) = \dfrac{\langle\mathbf{u}, \mathbf{v}\rangle}{\|\mathbf{v}\|^2}\mathbf{v} = \dfrac{\langle\mathbf{u}, \mathbf{v}\rangle}{\langle\mathbf{v}, \mathbf{v}\rangle}\mathbf{v} $$

Notice that for our vectors $\mathbf{u}$, $\mathbf{v}\neq\mathbf{0}$ and $\mathbf{p} = \text{Proj}_{\mathbf{v}}\mathbf{u}$,

$$ \begin{align*} \langle\mathbf{p}, \mathbf{p}\rangle &= \left\langle\dfrac{\alpha}{\|\mathbf{v}\|}\mathbf{v}, \dfrac{\alpha}{\|\mathbf{v}\|}\mathbf{v}\right\rangle = \left(\dfrac{\alpha}{\|\mathbf{v}\|}\right)^2 \langle\mathbf{v}, \mathbf{v}\rangle = \alpha^2 \\ \\ \langle\mathbf{u}, \mathbf{p}\rangle &= \left\langle \mathbf{u}, \dfrac{\alpha}{\|\mathbf{v}\|}\mathbf{v}\right\rangle = \alpha\dfrac{\langle\mathbf{u}, \mathbf{v}\rangle}{\|\mathbf{v}\|} = \alpha^2 \\ \\ \langle\mathbf{u}-\mathbf{p}, \mathbf{p}\rangle &= \langle\mathbf{u}, \mathbf{p}\rangle - \langle\mathbf{p},\mathbf{p}\rangle = \alpha^2 - \alpha^2 = 0 \end{align*} $$

The last equation proves Lemma 5.4.2.

Lemma 5.4.2¶

If $\ \mathbf{u}$, $\mathbf{v}\neq\mathbf{0}$ and $\mathbf{p} = \text{Proj}_{\mathbf{v}}\mathbf{u}$ are vectors in an inner product space $V$, then vectors $\mathbf{u}-\mathbf{p}$ and $\mathbf{p}$ are orthogonal.

Moreover, nonzero vectors $\mathbf{u}$ and $\mathbf{v}$ are linearly dependent if and only if the angle between them is $0$ or $\pi$. Equivalently, since $\mathbf{v}\neq\mathbf{0}$, $\mathbf{u}$ and $\mathbf{v}$ are linearly dependent if and only if $\mathbf{u}$ is a scalar multiple of $\mathbf{v}$, $\mathbf{u}=\beta\mathbf{v}$. In this case

$$ \mathbf{p} = \dfrac{\langle\beta\mathbf{v}, \mathbf{v}\rangle}{\langle\mathbf{v}, \mathbf{v}\rangle}\mathbf{v} = \beta\mathbf{v} = \mathbf{u}. $$

Lemma 5.4.3¶

If $\ \mathbf{u}$, $\mathbf{v}\neq\mathbf{0}$ and $\mathbf{p} = \text{Proj}_{\mathbf{v}}\mathbf{u}$ are vectors in an inner product space $V$, then $\mathbf{u}=\mathbf{p}$ if and only if vectors $\mathbf{u}$ and $\mathbf{v}$ are linearly dependent.
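Both lemmas can be illustrated with a small Python sketch. It assumes the Euclidean inner product on $\mathbb{R}^3$ by default; the function names `dot` and `project`, the optional parameter `ip`, and the sample vectors are hypothetical choices for this example. Any other inner product could be passed in through `ip`.

```python
def dot(x, y):
    """Euclidean inner product on R^n."""
    return sum(a * b for a, b in zip(x, y))


def project(u, v, ip=dot):
    """Vector projection of u onto v: (<u, v> / <v, v>) v, for nonzero v."""
    vv = ip(v, v)
    if vv == 0:
        raise ValueError("cannot project onto the zero vector")
    c = ip(u, v) / vv
    return [c * v_i for v_i in v]


# Illustrative vectors (assumed for this sketch).
u = [3.0, 1.0, 2.0]
v = [1.0, 1.0, 0.0]

p = project(u, v)
r = [u_i - p_i for u_i, p_i in zip(u, p)]  # the residual u - p

print(p)          # the projection of u onto v
print(dot(r, p))  # <u - p, p>, zero when u - p and p are orthogonal

# A vector that is a scalar multiple of v is its own projection.
u_dep = [3.0, 3.0, 0.0]
print(project(u_dep, v))
```

Passing the inner product as a parameter mirrors the definition: the projection formula uses only $\langle\cdot,\cdot\rangle$, so it applies in any inner product space.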

5.2.11 The Cauchy-Schwarz-Bunyakovsky Theorem¶

Theorem 5.4.4¶

The Cauchy-Schwarz-Bunyakovsky Theorem

If $\ \mathbf{u}$ and $\mathbf{v}$ are any two vectors in an inner product space $V$, then

$$ \left|\langle\mathbf{u}, \mathbf{v}\rangle\right| \le \|\mathbf{u}\|\,\|\mathbf{v}\| $$

Proof:¶

If $\mathbf{v}=\mathbf{0}$, then

$$ \left|\langle\mathbf{u}, \mathbf{v}\rangle\right| = 0 = \|\mathbf{u}\|\,\|\mathbf{v}\| $$

If $\mathbf{v}\neq\mathbf{0}$, then define $\mathbf{p}:=\text{Proj}_{\mathbf{v}}\mathbf{u}$. Since vectors $\mathbf{p}$ and $\mathbf{u}-\mathbf{p}$ are orthogonal and $\mathbf{u} = \mathbf{p} + (\mathbf{u}-\mathbf{p})$, the Pythagorean Rule implies

$$ \|\mathbf{p}\|^2 + \|\mathbf{u}-\mathbf{p}\|^2 = \|\mathbf{u}\|^2 $$

Replacing vector $\mathbf{p}$ with its definition

$$ \begin{align*} \|\mathbf{p}\|^2 &= \langle\mathbf{p}, \mathbf{p}\rangle \\ \\ &= \left\langle\dfrac{\langle\mathbf{u}, \mathbf{v}\rangle}{\langle\mathbf{v}, \mathbf{v}\rangle}\mathbf{v}, \dfrac{\langle\mathbf{u}, \mathbf{v}\rangle}{\langle\mathbf{v}, \mathbf{v}\rangle}\mathbf{v}\right\rangle \ \\ &= \left(\dfrac{\langle\mathbf{u}, \mathbf{v}\rangle}{\langle\mathbf{v}, \mathbf{v}\rangle}\right)^2\langle\mathbf{v}, \mathbf{v}\rangle = \dfrac{\langle\mathbf{u}, \mathbf{v}\rangle^2}{\langle\mathbf{v}, \mathbf{v}\rangle} \\ \\ &= \dfrac{\langle\mathbf{u}, \mathbf{v}\rangle^2}{\|\mathbf{v}\|^2} = \|\mathbf{u}\|^2 - \|\mathbf{u}-\mathbf{p}\|^2 \end{align*} $$

Multiplying both sides of the last equation by the nonzero scalar $\|\mathbf{v}\|^2$ yields

$$ \langle\mathbf{u}, \mathbf{v}\rangle^2 = \|\mathbf{u}\|^2\,\|\mathbf{v}\|^2 - \|\mathbf{u}-\mathbf{p}\|^2\,\|\mathbf{v}\|^2 \le \|\mathbf{u}\|^2\,\|\mathbf{v}\|^2 $$

Computing the square root of both sides of the last inequality gives us

$$ \left|\langle\mathbf{u}, \mathbf{v}\rangle\right| \le \|\mathbf{u}\|\,\|\mathbf{v}\| $$

∎

Corollary 5.4.5¶

For nonzero vectors $\mathbf{u}$ and $\mathbf{v}$ in an inner product space $V$, the Cauchy-Schwarz-Bunyakovsky Theorem implies

$$ -1\le \dfrac{\langle\mathbf{u}, \mathbf{v}\rangle}{\|\mathbf{u}\|\,\|\mathbf{v}\|}\le 1 $$

Thus there is a unique angle $\theta\in[0,\ \pi]$ such that

$$ \cos(\theta) = \dfrac{\langle\mathbf{u}, \mathbf{v}\rangle}{\|\mathbf{u}\|\,\|\mathbf{v}\|} $$

This tells us that the angle between two nonzero vectors in an inner product space is well-defined.
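As a worked check, consider the matrices $A$ and $B$ from Example 3 (continued), for which $\langle A, B\rangle_F = -15$, $\|A\|_F = \sqrt{38}$ and $\|B\|_F = \sqrt{18}$. The decimal values below are rounded approximations.

$$ \left|\langle A, B\rangle_F\right| = 15 \le \|A\|_F\,\|B\|_F = \sqrt{38}\,\sqrt{18} = \sqrt{684} = 6\sqrt{19} \approx 26.15 $$

so the inequality holds, and the cosine of the angle between $A$ and $B$ lies in $[-1,1]$ as the corollary requires:

$$ \cos(\theta) = \dfrac{\langle A, B\rangle_F}{\|A\|_F\,\|B\|_F} = \dfrac{-15}{6\sqrt{19}} = -\dfrac{5}{2\sqrt{19}} \approx -0.574 $$

This corresponds to an angle of roughly $2.18$ radians. As noted earlier, this angle is a formal quantity and need not have a physical interpretation.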

5.2.12 Norms¶

The magnitude or norm of a vector in a vector space can be defined independent of an inner product. In many applications, the magnitude of a vector is required without the notion of angle or direction.

Definition Norm of a vector¶

A function $\|\cdot\|\,:\,V\rightarrow\mathbb{R}^+$ that maps every vector $\mathbf{v}\in V$ to a nonnegative real number $\|\mathbf{v}\|$ is called a norm of a vector in vector space $V$ if for every vector $\mathbf{v}\in V$

$\|\mathbf{v}\|\ge 0$ with equality if and only if $\mathbf{v}=\mathbf{0}$.

$\|\alpha\mathbf{v}\| = |\alpha|\|\mathbf{v}\|$ for any scalar $\alpha\in\mathbb{R}$.

$\|\mathbf{v} + \mathbf{w}\|\le\|\mathbf{v}\| + \|\mathbf{w}\|$ for all $\mathbf{v},\ \mathbf{w}\in V$

A vector space together with a norm is called a normed linear space.

The third condition in the definition of a norm is called the triangle inequality.

One should verify that the norm induced by an inner product satisfies the definition of a norm here. That is,

Theorem 5.4.6¶

Every Inner Product Space is a Normed Linear Space

Every inner product space $V$ is a normed linear space with its norm defined for every vector $\mathbf{v}\in V$ by

$$ \|\mathbf{v}\| = \sqrt{\langle\mathbf{v}, \mathbf{v}\rangle} $$

Proof:¶

Given an inner product space $V$, then for every pair of vectors $\mathbf{v}$, $\mathbf{w}\in V$, and real scalar $\alpha\in\mathbb{R}$,

$\|\mathbf{v}\|^2 = \langle\mathbf{v}, \mathbf{v}\rangle \ge 0$, with $\langle\mathbf{v}, \mathbf{v}\rangle = 0$ if and only if $\mathbf{v}=\mathbf{0}$ from property (1) of an inner product.

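$\|\alpha\mathbf{v}\|^2 = \langle\alpha\mathbf{v}, \alpha\mathbf{v}\rangle = \alpha^2\langle\mathbf{v}, \mathbf{v}\rangle = \alpha^2\|\mathbf{v}\|^2$, so taking square roots gives $\|\alpha\mathbf{v}\| = |\alpha|\,\|\mathbf{v}\|$.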

Using the Cauchy-Schwarz-Bunyakovsky Inequality we have

$$ \begin{align*} \|\mathbf{v} + \mathbf{w}\|^2 &= \langle\mathbf{v} + \mathbf{w}, \mathbf{v} + \mathbf{w}\rangle \\ \\ &= \langle\mathbf{v},\mathbf{v}\rangle + 2\langle\mathbf{v},\mathbf{w}\rangle + \langle\mathbf{w},\mathbf{w}\rangle \\ \\ &\le \|\mathbf{v}\|^2 + 2\|\mathbf{v}\|\,\|\mathbf{w}\| + \|\mathbf{w}\|^2 \\ \\ &= \left(\|\mathbf{v}\| + \|\mathbf{w}\|\right)^2 \end{align*} $$

Computing the square root of both sides yields the triangle inequality, $\|\mathbf{v} + \mathbf{w}\|\le\|\mathbf{v}\| + \|\mathbf{w}\|$.

∎

Definition The Distance Between Two Vectors¶

For vectors $\mathbf{u}$ and $\mathbf{v}$ in normed linear space $V$, the distance from vector $\mathbf{u}$ to vector $\mathbf{v}$ is defined by

$$ \text{dist}(\mathbf{u},\mathbf{v}) := \|\mathbf{v} - \mathbf{u}\| $$

What about the converse proposition? Is every normed linear space also an inner product space? The answer is no. While every inner product induces a norm on a vector space, most norms are not induced by an inner product.

Corollary 5.2.7¶

Every inner product space, that is, every vector space $V$ accompanied by an inner product $\langle\cdot,\cdot\rangle:V\times V\rightarrow\mathbb{R}$, also has well-defined notions of norm, angle, projection, component, and distance.

For any vectors $\mathbf{v},\mathbf{w}\in V$

$$ \begin{align*} \left\|\mathbf{v}\right\| &= \langle\mathbf{v},\mathbf{v}\rangle^{1/2} \\ \\ \cos(\theta) &= \dfrac{\langle\mathbf{v},\mathbf{w}\rangle}{\left\|\mathbf{v}\right\|\,\left\|\mathbf{w}\right\|} \\ \\ \text{Proj}_{\mathbf{w}}\mathbf{v} &= \dfrac{\langle\mathbf{v},\mathbf{w}\rangle}{\langle\mathbf{w},\mathbf{w}\rangle}\mathbf{w} \\ \\ \text{Comp}_{\mathbf{w}}\mathbf{v} &= \dfrac{\langle\mathbf{v},\mathbf{w}\rangle}{\left\|\mathbf{w}\right\|} \\ \\ \text{dist}(\mathbf{v},\mathbf{w}) &= \left\|\mathbf{w} - \mathbf{v}\right\| \end{align*} $$

5.2.13 p-Norms¶

Example 13¶

Define the function $\|\cdot\|_1\,:\mathbb{R}^n\rightarrow\mathbb{R}^+$ for every vector $\mathbf{x}\in\mathbb{R}^n$ by

$$ \|\mathbf{x}\|_1 := |x_1| + |x_2| + |x_3| + ... + |x_n| = \displaystyle\sum_{i=1}^n |x_i| $$

We show that this function defines a norm on $\mathbb{R}^n$; that is, $\|\cdot\|_1$ satisfies all three conditions of the definition of a norm on the vector space $\mathbb{R}^n$. For every pair of vectors $\mathbf{x}$, $\mathbf{y}\in\mathbb{R}^n$ and real scalar $\alpha\in\mathbb{R}$,

$\|\mathbf{x}\|_1 = \displaystyle\sum_{i=1}^n |x_i| \ge 0$ since it is a sum of nonnegative real numbers. Moreover if $\|\mathbf{x}\|_1=0$, then $|x_i|=0$ for all $1\le i\le n$, so $x_i=0$ for all $1\le i\le n$. Hence $\mathbf{x}=\mathbf{0}$.

$\|\alpha\mathbf{x}\|_1 = \displaystyle\sum_{i=1}^n \left|\alpha x_i\right| = \displaystyle\sum_{i=1}^n |\alpha||x_i| = |\alpha|\displaystyle\sum_{i=1}^n |x_i| = |\alpha|\|\mathbf{x}\|_1$

Using the triangle inequality for real numbers,

$$ \|\mathbf{x} + \mathbf{y}\|_1 = \displaystyle\sum_{i=1}^n |x_i + y_i| \le \displaystyle\sum_{i=1}^n \left(|x_i| + |y_i|\right) = \displaystyle\sum_{i=1}^n |x_i| + \displaystyle\sum_{i=1}^n |y_i| = \|\mathbf{x}\|_1 + \|\mathbf{y}\|_1 $$

Definition $p$-norm¶

For any real number $p\ge 1$, one can define a norm on the vector space $\mathbb{R}^n$ called a $p$-norm, such that for every vector $\mathbf{x}\in\mathbb{R}^n$,

$$ \|\mathbf{x}\|_p := \left(\displaystyle\sum_{i=1}^n |x_i|^p \right)^{1/p} $$

Example 14¶

In particular, for $p=2$, the $2$-norm is given by

$$ \|\mathbf{x}\|_2 := \left(\displaystyle\sum_{i=1}^n x_i^2 \right)^{1/2} = \sqrt{\langle\mathbf{x}, \mathbf{x}\rangle} $$

The 2-norm is the only p-norm induced by an inner product, the Euclidean inner product.

Definition¶

The uniform norm or infinity norm is defined on the vector space $\mathbb{R}^n$ for every vector $\mathbf{x}\in\mathbb{R}^n$ by

$$ \|\mathbf{x}\|_{\infty} := \text{max}\left\{ |x_i|\,:\,1\le i\le n \right\} $$

In general, the Pythagorean Rule will not hold for a norm that is not derived from an inner product. Define $\mathbf{x}_1$, $\mathbf{x}_2\in\mathbb{R}^2$ by

$$ \mathbf{x}_1 = \begin{bmatrix} -2\ \\ \ \ 2\ \end{bmatrix}\ \text{and}\ \ \mathbf{x}_2 = \begin{bmatrix}\ \ 1\ \\ -3\ \end{bmatrix} $$

Using the uniform norm

$$ \|\mathbf{x}_1\|_{\infty}^2 = 4\ \text{and}\ \ \|\mathbf{x}_2\|_{\infty}^2 = 9 $$

but,

$$ \|\mathbf{x}_1 + \mathbf{x}_2\|_{\infty}^2 = 1 \neq 4 + 9 $$

Exercise 3¶

Let $\mathbf{x}=\begin{bmatrix} -2\ \\ \ \ 4\ \\ -1\ \end{bmatrix}$. Compute $\|\mathbf{x}\|_1$, $\|\mathbf{x}\|_2$, $\|\mathbf{x}\|_3$, and $\|\mathbf{x}\|_{\infty}$.

Follow Along

$$ \begin{align*} 1.&\ \|\mathbf{x}\|_1 = |-2| + |4| + |-1| = 7 \\ \\ 2.&\ \|\mathbf{x}\|_2 = \left( 4 + 16 + 1 \right)^{1/2} = \sqrt{21} \\ \\ 3.&\ \|\mathbf{x}\|_3 = \left( 8 + 64 + 1 \right)^{1/3} = \sqrt[3]{73} \\ \\ 4.&\ \|\mathbf{x}\|_{\infty} = \text{max}\left\{ |-2|, |4|, |-1| \right\} = 4 \\ \end{align*} $$
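The four values in this exercise can be reproduced with a short Python sketch. The function names `p_norm` and `inf_norm` are hypothetical, and vectors are represented as plain lists, which is an assumption of this sketch.

```python
def p_norm(x, p):
    """p-norm on R^n: (sum of |x_i|^p)^(1/p), for a real number p >= 1."""
    if p < 1:
        raise ValueError("p must be at least 1")
    return sum(abs(x_i) ** p for x_i in x) ** (1.0 / p)


def inf_norm(x):
    """Uniform (infinity) norm: the largest absolute value of an entry."""
    return max(abs(x_i) for x_i in x)


x = [-2, 4, -1]

for p in (1, 2, 3):
    print(p, p_norm(x, p))
print("inf", inf_norm(x))
```

The printed values can be compared with $7$, $\sqrt{21}$, $\sqrt[3]{73}$ and $4$ from the computation above.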